When it comes to expanding your business in Colombia, having a targeted and reliable Colombia Email List is crucial. At Emailproleads.com, we offer top-notch email lists tailored to your specific requirements, ensuring your marketing efforts reach the right audience effectively.

In today's digital age, having access to a targeted Colombia email list can significantly elevate your marketing efforts. At EmailProLeads, we specialize in providing high-quality email lists that empower businesses to connect with their desired audience effectively. If you're wondering how an email list can transform your outreach strategy, you're in the right place.

At Emailproleads.com, we pride ourselves on offering a wide array of Colombia email lists, ranging from B2B and B2C databases to industry-specific and geographically targeted lists. Our commitment to providing accurate, up-to-date, and legally compliant data sets us apart as a leading email list provider in Colombia. With our resources, you can enhance your marketing strategies, connect with the right audience, and drive your business towards unparalleled success.

What is a Colombia Email List?

Unlock Business Opportunities with our Colombia Email List - Trusted by Emailproleads!

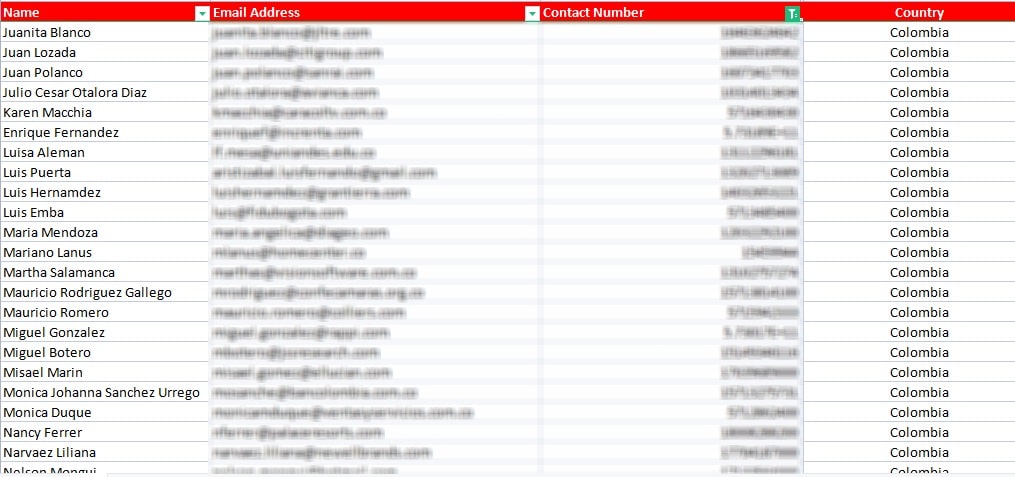

A Colombia Email List is a curated database of email addresses belonging to individuals and businesses across Colombia. It serves as a potent tool for businesses aiming to establish meaningful connections, drive sales, and enhance brand awareness. Our Colombia Email List is meticulously compiled, providing you with accurate and updated information for your marketing campaigns.

A Colombia email list is a carefully curated database containing email addresses of individuals and businesses across Colombia. This invaluable resource opens doors to direct communication, allowing you to promote your products or services to a specific, interested audience. With a Colombia email list, you can enhance customer engagement, increase brand awareness, and boost your overall marketing ROI.

HURRY UP! FREE 1 Industry Lead generation Included - Act Now!

Buy Colombia Email List Now & Save $1599. Usually $499 Monthly, Today Only 1-Time $199.

Can You Buy Email Lists in Colombia?

Certainly! Emailproleads.com provides you with the opportunity to buy high-quality Colombia Email Lists. Our comprehensive databases cover various industries, job roles, and regions within Colombia, enabling you to precisely target your desired audience. Purchasing email lists in Colombia from us guarantees a smooth and successful marketing campaign.

Yes, you can buy email lists in Colombia, and EmailProLeads is your trusted partner in this endeavor. Our user-friendly platform ensures a seamless purchasing experience, providing you with instant access to a wealth of contacts. Whether you're targeting potential clients in Bogotá, Medellín, or Cartagena, our Colombia email lists are tailored to meet your unique business requirements.

Where to Buy Colombia Email Lists?

Wondering where to buy Colombia Email Lists? Look no further than Emailproleads.com. We offer a user-friendly platform for you to explore and purchase our extensive email lists. With just a few clicks, you can gain access to valuable contacts in Colombia, making your marketing endeavors highly effective.

If you're wondering where to buy Colombia email lists, look no further than EmailProLeads.com. Our extensive database covers various sectors and regions within Colombia, ensuring that you find the perfect audience for your marketing campaigns. By choosing EmailProLeads, you're opting for accuracy, reliability, and a hassle-free buying process.

Colombia Email Address List: Your Gateway to Business Success

Our Colombia Email Address List is a treasure trove of opportunities for businesses. Whether you're focusing on specific industries, job roles, or regions within Colombia, our email address list has you covered. This targeted approach ensures that your marketing messages resonate with your audience, driving higher engagement and conversion rates.

Our Colombia Email Address List is a goldmine of opportunities for businesses seeking to expand their reach. It encompasses a diverse array of email addresses, allowing you to target specific industries or geographical locations. Whether you're promoting a new product, organizing an event, or conducting market research, our email address list provides you with the foundation for successful campaigns.

Where Can I Get a Free List of Email Addresses in Colombia?

At Emailproleads.com, we understand the importance of testing our services before committing. That's why we offer a Free List of Email Addresses in Colombia. This sample allows you to explore the quality and depth of our data before making a purchase. Sign up on our platform to receive your free sample and witness the potential of our Colombia Email Lists.

While our premium email lists offer unparalleled accuracy and depth, we understand the importance of exploring options. For businesses on a budget, EmailProLeads.com offers a free Colombia email address list sample. This sample allows you to assess the quality of our data, ensuring that you make an informed decision about your marketing strategy.

Colombia Business Email List: Fueling Your Growth

Our Colombia Business Email List is specifically curated for businesses looking to expand their B2B connections. Whether you're targeting decision-makers, executives, or professionals in Colombia, our business email list provides you with accurate and verified contacts. This targeted approach ensures that your B2B marketing campaigns yield maximum results.

Our Colombia Business Email List is designed to empower entrepreneurs and businesses with the tools they need to succeed. Whether you're a startup looking to establish your presence or an established company seeking new partnerships, our business email list caters to your unique needs. By connecting with decision-makers and industry leaders, you can pave the way for lucrative collaborations and business opportunities.

Colombia B2B Email List by Job Roles: Precise Targeting, Maximum Impact

Looking for a B2B Email List in Colombia tailored to specific job roles? At Emailproleads.com, we offer a Colombia B2B Email List categorized by job roles. Whether you're seeking CEOs, CFOs, or IT professionals, our B2B email list provides you with the contacts you need. Precise targeting ensures that your messages reach the right decision-makers, driving successful business collaborations.

In today's competitive landscape, personalization is key to effective marketing. That's why our Colombia B2B Email List includes segmentation based on job roles. Whether you're targeting CEOs, managers, or IT professionals, our segmented email list ensures that your message reaches the right hands. By tailoring your marketing campaigns, you can significantly enhance engagement and drive conversion rates.

| Job roles | Phone number | |
|---|---|---|
| CEO | 50,000 | 50,000 |
| CFO | 45,000 | 45,000 |
| CTO | 40,000 | 40,000 |
| HR Manager | 35,000 | 35,000 |
| Marketing Manager | 30,000 | 30,000 |
| IT Specialist | 25,000 | 25,000 |
| Financial Analyst | 20,000 | 20,000 |
| Operations Manager | 18,000 | 18,000 |
| Customer Service Manager | 15,000 | 15,000 |
| Sales Representative | 12,000 | 12,000 |
| Graphic Designer | 10,000 | 10,000 |
| Content Writer | 8,000 | 8,000 |
| Legal Counsel | 6,000 | 6,000 |
| Research Analyst | 5,000 | 5,000 |
| Administrative Assistant | 4,000 | 4,000 |
| Technical Support Specialist | 3,000 | 3,000 |
| Project Manager | 2,000 | 2,000 |
| Data Analyst | 1,500 | 1,500 |
| Web Developer | 1,000 | 1,000 |
| Designer | 500 | 500 |
| Total | 359,000 | 359,000 |

Where Can I Get a B2B Email List in Colombia?

Finding a reliable B2B Email List in Colombia is now easier than ever. Emailproleads.com offers a diverse range of B2B email lists, allowing you to connect with businesses across various sectors. With our detailed B2B contacts, you can initiate partnerships, collaborations, and sales opportunities in Colombia.

Are you searching for a reliable B2B email list in Colombia to expand your business reach? Look no further than Emailproleads.com. We specialize in providing high-quality, up-to-date B2B email lists tailored to your specific needs. Whether you're targeting specific industries or regions within Colombia, our extensive database helps you connect effectively with potential clients, partners, and collaborators.

Colombia Company Email List: Your Passport to Corporate Connections

Our Colombia Company Email List is a valuable resource for businesses aiming to engage with other companies in Colombia. Whether you're a supplier, service provider, or distributor, our company email list enables you to establish corporate connections, driving growth and revenue for your business.

Our Colombia Company Email List is a valuable resource for businesses aiming to establish meaningful connections within the Colombian market. With a diverse range of companies and industries represented, our email list offers detailed and accurate information, including email addresses, company names, and industry classifications. This comprehensive data enables you to craft targeted email campaigns, enhancing your marketing strategies and driving business growth.

Colombia Industries List: Navigating the Business Landscape

Colombia boasts a diverse business landscape, encompassing industries such as technology, agriculture, finance, and manufacturing. Our Colombia Industries List provides you with insights into these sectors, allowing you to tailor your marketing strategies accordingly. Whether you're targeting the tech hubs in Bogotá or the agricultural regions around Medellín, our industry-specific data ensures that your campaigns are precise and impactful.

Colombia's diverse economy boasts a wide range of industries, from agriculture and manufacturing to technology and finance. Our Colombia Industries List provides you with access to businesses across various sectors, allowing you to customize your marketing efforts based on specific industries. Whether you're interested in agricultural businesses in Cundinamarca or tech startups in Bogotá, our database ensures you can connect with the right companies.

Colombia Consumer Email List: Reaching the Hearts of Consumers

Connecting with consumers in Colombia is made effortless with our Colombia Consumer Email List. This database includes email addresses of individuals across the country, enabling you to promote your products and services directly to your target audience. Colombia's vibrant consumer market offers numerous opportunities, and our consumer email list helps you tap into this potential.

For businesses targeting individual consumers in Colombia, our Colombia Consumer Email List is an invaluable asset. With a vast collection of email addresses, you can engage directly with potential customers. Colombia, with its growing consumer market, offers numerous opportunities for businesses. Our consumer email list allows you to segment your audience based on various criteria, ensuring your marketing campaigns are highly targeted and effective.

Colombia B2C Email List: Personalized Marketing, Enhanced Engagement

Our Colombia B2C Email List allows you to engage with individual consumers on a personal level. Whether you're a retail business, e-commerce platform, or service provider, our B2C email list ensures that your marketing messages resonate with your audience. Personalized marketing campaigns drive higher engagement and conversion rates, making your business stand out in the competitive Colombian market.

Our Colombia B2C Email List is tailored for businesses looking to reach individual consumers in Colombia. This list provides you with access to a diverse range of consumers, allowing you to create personalized email campaigns. Whether you're promoting products, services, or special offers, our B2C email list helps you establish direct communication with your target audience.

Colombia Zip Code Email List: Pinpoint Accuracy for Localized Campaigns

Local businesses can benefit greatly from our Colombia Zip Code Email List. This list is organized by zip codes, allowing you to target specific regions with precision. Whether you're a restaurant in Cali or a boutique in Cartagena, our zip code email list ensures that your promotions reach the right audience, driving foot traffic and sales for your business.

Are you targeting specific regions within Colombia? Our Colombia Zip Code Email List allows you to focus your marketing efforts with precision. Organized by zip codes, this list enables you to pinpoint your audience based on geographic location. Whether you're interested in Medellín's bustling neighborhoods or Cartagena's coastal areas, our zip code email list has you covered.

| Location | Phone Number | |
|---|---|---|
| Bogotá | 4967 | 4967 |
| Medellín | 9986 | 9986 |
| Cali | 7096 | 7096 |
| Barranquilla | 33077 | 33077 |
| Cartagena | -2242 | -2242 |
| Cúcuta | 11257 | 11257 |
| Soledad | 9598 | 9598 |
| Ibagué | 5133 | 5133 |
| Bucaramanga | 6452 | 6452 |
| Soacha | 21684 | 21684 |
| Santa Marta | 26077 | 26077 |
| Villavicencio | 8062 | 8062 |
| Pereira | 32633 | 32633 |
| Montería | 3121 | 3121 |
| Neiva | 35614 | 35614 |
| Pasto | 36309 | 36309 |
| Manizales | 13840 | 13840 |
| Valledupar | 19294 | 19294 |
| Armenia | 1837 | 1837 |
| Popayán | 28570 | 28570 |
| Total | 571416 | 571416 |

Buy Colombia Email Database: Your Gateway to Profitable Connections

Ready to expand your business in Colombia? Our Buy Colombia Email Database option provides you with instant access to our high-quality data. With accurate and verified email addresses, you can kickstart your marketing campaigns, generate leads, and drive revenue for your business. Investing in our Colombia Email Database guarantees a positive return on investment and long-lasting business relationships.

Emailproleads.com offers you the opportunity to buy a comprehensive Colombia Email Database tailored to your business requirements. Our database includes both B2B and B2C email addresses, providing you with a diverse and valuable resource for your marketing campaigns. Whether you're a startup looking to establish your presence or an established company seeking expansion, our Colombia Email Database is the key to unlocking your business potential.

Colombia Mailing List: Targeted Direct Mail Campaigns

Direct mail remains a powerful marketing tool, especially when targeting specific regions in Colombia. Our Colombia Mailing List includes mailing addresses of businesses and consumers across the country. Whether you're promoting events, discounts, or new product launches, our mailing list ensures that your mailers reach the right audience, driving responses and conversions for your campaigns.

If you're considering direct mail campaigns in Colombia, our Colombia Mailing List is an essential tool. This list includes mailing addresses of businesses and consumers across the country, allowing you to connect with your audience through physical mail. Direct mail remains an effective marketing strategy, and our mailing list ensures your message reaches the right hands.

How Much Does a Mailing List Cost in Colombia?

The cost of a mailing list in Colombia varies based on your specific requirements. Factors such as the type of data (B2B or B2C), the quantity of records, and the level of customization influence the price. At Emailproleads.com, we offer competitive pricing options, ensuring that businesses of all sizes can access our high-quality mailing lists without breaking the bank. Contact our team today for a customized quote tailored to your needs.

The cost of a mailing list in Colombia varies based on factors such as the type of data (B2B or B2C), the quantity of records, and the level of customization. Emailproleads.com offers competitive pricing options, ensuring businesses of all sizes can access high-quality mailing lists without breaking the bank. Contact us today for a personalized quote tailored to your specific needs.

Email List Providers in Colombia: Trustworthy and Reliable

When it comes to choosing email list providers in Colombia, reliability is key. Emailproleads.com stands out as a trustworthy provider, offering accurate and up-to-date data for your marketing campaigns. Our commitment to quality ensures that your messages reach the right audience, driving successful outcomes for your business.

When it comes to reliable and accurate email list providers in Colombia, Emailproleads.com stands out as a trusted source. Our commitment to data accuracy, compliance with regulations, and excellent customer service sets us apart from the rest. With our Colombia email lists, you can confidently execute your marketing campaigns, knowing you're reaching the right audience.

Who Are the Best Email List Providers in Colombia?

The best email list providers in Colombia are those that prioritize accuracy, compliance, and customer satisfaction. Emailproleads.com excels in all these aspects, making us one of the best email list providers in Colombia. Our extensive databases, combined with our dedication to customer service, set us apart from the competition. Choose Emailproleads.com for your email list needs and experience unparalleled results in your marketing endeavors.

The best email list providers in Colombia are those that offer up-to-date, reliable data and exceptional customer support. Emailproleads.com is recognized as one of the best email list providers in Colombia, catering to businesses across various industries. Our dedication to quality and customer satisfaction ensures you receive the best possible service for your marketing needs.

Email Directory Colombia: Your Comprehensive Business Resource

Our Email Directory Colombia is a comprehensive resource for businesses seeking contacts in Colombia. Whether you're looking for business partners, suppliers, or clients, our email directory provides you with a vast collection of contacts. Simplify your business connections and enhance your network with our Email Directory Colombia.

In need of an email directory in Colombia? Emailproleads.com provides a comprehensive solution. Our email directory includes a vast collection of email addresses from individuals and businesses across Colombia. With this resource, you can enhance your outreach efforts and expand your network within the country.

Colombia Email List Free: Explore Our Data at No Cost

Curious about our data quality? Explore our Colombia Email List Free sample to gain insights into the depth and accuracy of our databases. This sample allows you to assess our data before making a purchase, ensuring that you're confident in the quality of our offerings. Sign up for your free sample today and experience the potential of our Colombia Email Lists.

Exploring your options? Emailproleads.com offers a free Colombia Email List sample that you can download and explore. This sample allows you to assess the quality and depth of our data before making a purchase decision. If you find the sample valuable, you can confidently proceed to explore our premium options, opening doors to limitless marketing opportunities.

How Do I Get a Free Colombia Email List?

Getting a Free Colombia Email List sample is easy. Visit Emailproleads.com and fill out the sample request form. Our team will promptly provide you with access to our high-quality data, allowing you to explore the possibilities for your marketing campaigns. Take advantage of our free sample and elevate your marketing strategies today.

Email Marketing Lists Colombia: Driving Successful Campaigns

Email marketing remains one of the most effective ways to engage with your audience in Colombia. With our Email Marketing Lists Colombia, you can create personalized and targeted email campaigns that drive results. Whether you're promoting products, services, or events, our email marketing lists ensure that your messages are received by the right audience, increasing your chances of conversions and sales.

Email marketing is a powerful tool for businesses looking to promote their products or services in Colombia. With our Colombia email lists, you can create highly targeted email marketing campaigns that resonate with your audience. Whether you're launching a new product, promoting a sale, or announcing an event, email marketing allows you to engage with your customers directly.

Is Email Marketing Legal in Colombia?

Yes, email marketing is legal in Colombia, but it must comply with regulations and privacy laws. It's essential to obtain consent from recipients before sending marketing emails and to give them a way to opt out. Emailproleads.com ensures that our data complies with these regulations, giving you peace of mind when conducting email marketing campaigns in Colombia.

Yes, email marketing is legal in Colombia, but it is subject to regulations that businesses must comply with. It is essential to adhere to Colombian data protection law, notably Law 1581 of 2012, when conducting email marketing campaigns. Emailproleads.com ensures that our data complies with these regulations, providing you with a legal and ethical foundation for your email marketing initiatives in Colombia.

Colombia Contact Number List: Enhancing Your Communication

In addition to email addresses, our Colombia Contact Number List provides you with phone numbers for both businesses and consumers. Enhance your communication strategies with phone calls and text messages, ensuring that your messages are received promptly. Our contact number list simplifies your outreach efforts, allowing you to connect with your audience effectively.

In addition to email addresses, Emailproleads.com offers a Colombia Contact Number List, including phone numbers for both businesses and consumers. This list enables you to connect with your audience through phone calls and text messages, enhancing your multichannel marketing efforts.

COLOMBIA EMAIL LISTS FAQs

Targeted Colombia Email List: Reach Your Audience Effectively with Emailproleads.

How to Address Colombia Mail?

When addressing mail to Colombia, ensure you include the recipient's name, street address, city, and postal code. For reliable contact data, rely on Emailproleads.

How Are Colombia Addresses Written?

Colombian addresses typically follow the format: recipient's name, street name and number, neighborhood, city, postal code, Colombia. Rely on "Emailproleads" for accurate details.
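As a rough illustration of the format described above, the following Python sketch assembles an address block from its parts. The function name `format_address`, the choice to put the city and postal code on one line, and the sample values are all assumptions made for this example, not an official postal standard.

```python
def format_address(name, street, neighborhood, city, postal_code):
    """Build a mailing address block in the order described above.

    Hypothetical helper: field order follows the text (name, street,
    neighborhood, city, postal code, country). Placing city and postal
    code on the same line is an assumption of this sketch.
    """
    city_line = f"{city} {postal_code}".strip()
    lines = [name, street, neighborhood, city_line, "Colombia"]
    # Skip empty parts (for example, when no neighborhood is known).
    return "\n".join(part.strip() for part in lines if part and part.strip())


# Placeholder values for illustration only.
print(format_address("Ana Pérez", "Calle 10 # 20-30", "Chapinero", "Bogotá", "110231"))
```

Empty fields are skipped, so a record without a neighborhood still produces a clean block.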

What Should Be a Colombia Email Format?

A standard Colombian email address follows the usual format: the recipient's username, the "@" symbol, and a domain name (e.g., gmail.com). For business communication, rely on "Emailproleads" for verified contacts.

What Do Colombian Addresses Look Like?

Colombian addresses comprise the recipient's name, street address, city, and postal code. For business contacts, trust "Emailproleads" for authentic Colombian addresses.

Where Can I Find a List of Companies in Colombia with Email Addresses?

For a list of Colombian companies with email addresses, consider using "Emailproleads" to access comprehensive and reliable business contact information.

What Is Email Etiquette in Colombia?

In Colombia, professional email etiquette emphasizes politeness, clear language, and timely responses. Trust "Emailproleads" for genuine contacts to maintain proper communication.

What Does a Colombia Email Address Look Like?

A Colombian email address consists of a username, the "@" symbol, and a domain (e.g., gmail.com). For accurate business emails, rely on "Emailproleads" for authentic Colombian contacts.
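To make the username, "@", and domain structure concrete, here is a minimal Python sketch that checks an address has that basic shape and splits it into its two parts. The pattern is deliberately simple and is not a full standards-compliant validator; the function name `split_email` and the sample address are assumptions for illustration.

```python
import re
from typing import Tuple

# Simple shape check: a non-empty username, one "@", and a domain with a dot.
# Illustrative only; real-world address validation is more involved.
EMAIL_SHAPE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def split_email(address: str) -> Tuple[str, str]:
    """Return (username, domain), or raise ValueError if the shape is wrong."""
    candidate = address.strip()
    if not EMAIL_SHAPE.match(candidate):
        raise ValueError(f"not a username@domain address: {address!r}")
    username, domain = candidate.rsplit("@", 1)
    # Domains are case-insensitive, so normalize them to lowercase.
    return username, domain.lower()


# Hypothetical sample address using a reserved example domain.
print(split_email("ventas@example.com.co"))
```

A check like this only confirms the shape of an address; it does not confirm that the mailbox exists or that the recipient has consented to receive marketing email.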

What Email Does Colombia Use?

People and businesses in Colombia use a variety of email providers, including Gmail, Outlook, and local providers. For specific contacts, trust "Emailproleads" to find the right email addresses for your needs.

Does Colombia Use Gmail?

Yes, Colombian residents and businesses commonly use Gmail for email communication. For verified Gmail addresses, turn to "Emailproleads" for trustworthy contacts.

Does Colombia Post Require a Signature?

Colombia Post may require a signature for certain deliveries. Check with the specific courier service or provider for their signature requirements for your mail or package.

Why Does Colombia Use Email Instead of SMS?

In Colombia, as in many countries, email is often preferred for formal communication because of its versatility, ease of tracking, and ability to convey detailed information. Email is also widely used in business contexts for its professionalism and documentation capabilities.

Colombia Email List Summary: Your Complete Business Solution

In summary, Emailproleads.com offers a comprehensive suite of Colombia Email Lists tailored to meet your unique business needs. Whether you're targeting businesses, consumers, specific industries, or regions, our high-quality data ensures that your marketing efforts yield optimal results. With our email lists, you can create highly targeted campaigns, enhance your outreach, and achieve your business objectives in Colombia.

To get started, visit Emailproleads.com and explore our range of Colombia Email Lists. Reach out to our team for personalized assistance and discover how our data can propel your marketing strategies to new heights. Trust Emailproleads.com for all your email list needs and experience unparalleled success in your marketing endeavors.

With a commitment to data accuracy, legal compliance, and customer satisfaction, Emailproleads.com stands as your trusted partner in navigating the Colombian market. Our user-friendly interface, coupled with customizable options, ensures that you receive the most relevant and tailored data for your marketing endeavors. Explore our offerings today and take a significant step towards elevating your marketing strategies and achieving unparalleled success in Colombia.